Lessons learned from fighting phishers

Anna’s new iPhone

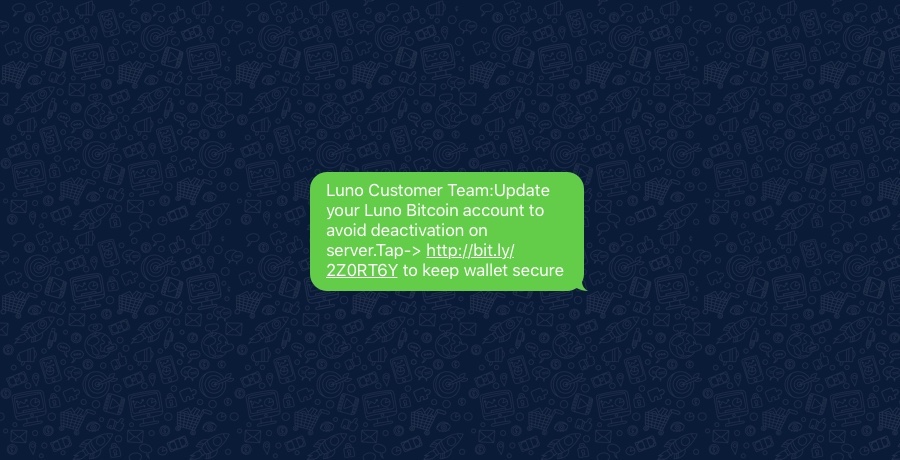

Anna got a new iPhone and, like most other people, she is ecstatic to unbox and start playing around with it. While setting it up, she receives a text message asking her to update her Luno details. While drenched in happy hormones, her brain does a quick calculation on the safety of the message – all seems legitimate.

Her brain instructs her to tap on the link, taking her to “Luno’s website”, where she enters her email address and password to sign in.

Exactly 9 minutes later, she receives a call from someone at Luno, asking her to authorise a transaction. It’s at this point that she realises she “screwed up” – her brain lied to her and the text message wasn’t legit.

Anna got phished.

According to all standards, Anna isn’t your “typical candidate” to fall for a phishing text. She’s a young, smart woman working as a procurement specialist at an up-and-coming startup. She sincerely thought that she’d never become a phishing victim. But she did.

Human biology worked against her, and she almost lost all of her crypto.

The thing is, it doesn’t matter whether she’s young or a woman or smart – what matters is that she’s human and experiences the same emotions all of us experience when we use products daily.

So the vital question is this: how do you design for people whose emotions are as volatile and unpredictable as the price of Bitcoin?

This is what we’ll explore in this post.

We’ll take a look at how we help keep people’s money safer at Luno by exploring:

- The importance of understanding the human brain

- How we get to know people better

- The magic of invisible interfaces

- Great dev and design collaboration

Let’s dive in!

1. What’s inside the human brain

To understand why Anna got phished, I’ll use the analogy of a radar – a system that detects objects around it. Every human has a radar to detect deception and its primary job is to let you know whether something is legitimate or not. The problem with this radar system though is that it’s highly dependent on things like emotional intelligence, cognitive motivation, personality, and mood.

Sex, social, and “feel good” hormones decrease this radar’s ability to detect deception, whereas stress hormones increase it. So when you’re happy, for example, you are less likely to detect deceptive messages.

Going back to Anna’s story, this is one of the primary reasons she was vulnerable to this attack. Because she was so excited about her new iPhone, her brain flooded her body with “feel good” hormones, instantly lowering her ability to detect the untruthfulness of the deceptive phishing text message.

So why does this matter? Regardless of the customer segment we’re designing for, they are all humans who have emotions that can skyrocket or plummet like the price of cryptocurrencies, altering their experience of the product.

Being aware of this gives words like “user experience”, “human factor design”, and “usability” an entirely new dimension, helping us design a safer experience at Luno.

But how do we get to know humans, just like you, better?

2. Channels to the human brain

We love getting people into the office to understand their individual stories better; that’s how we got to know Anna. But besides doing traditional customer research, we’ve also found app store reviews and our ticketing system very helpful for walking around in the minds of our customers (sometimes we do the moonwalk as well).

App Store Reviews

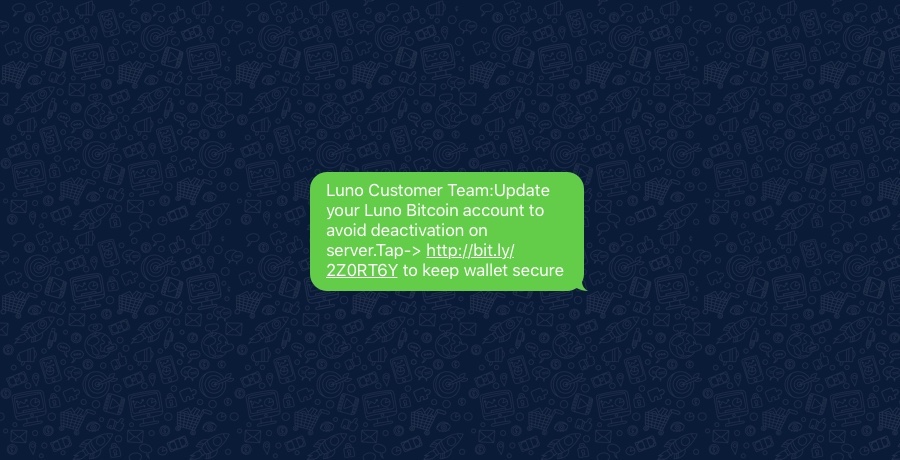

I’ve started to make it a habit to scour our app store reviews to better understand how our customers think and talk. We have a dedicated Slack channel that aggregates our app store reviews, and it has several benefits:

- It makes feedback a lot more accessible to the whole team

- It allows us to quickly and easily go through the latest reviews each morning

- It facilitates chatting about specific reviews, allowing us to include the relevant team members in one channel

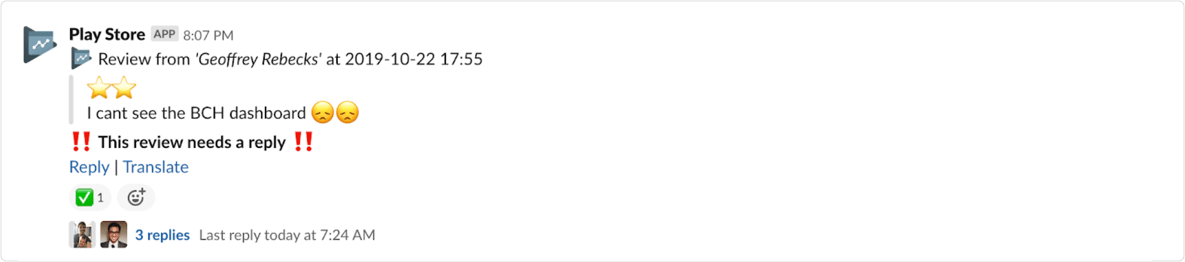

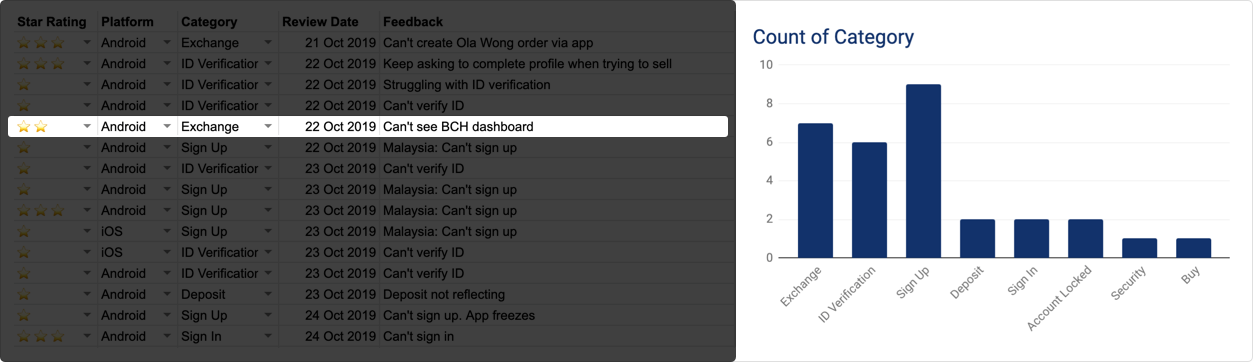

We also created a Google sheet where we document and tag the reviews to help us better quantify the data.

After the launch of our new app, for example, we wanted to validate whether the majority of low-star reviews came from our more advanced customer base. The sheet helped us to:

- Pick up trends, like which changes caused the most significant knee-jerk reaction from our customers

- Measure sentiment changes as we addressed customer feedback

- See the correlation between app store reviews and the changes we make to the product
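
To show what quantifying tagged reviews can look like, here is a minimal Python sketch. It is only an illustration, not our actual sheet or tooling: the `Review` fields, the tag values, and the sample reviews are all made up for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    stars: int    # rating from 1 to 5
    segment: str  # hypothetical tag, e.g. "advanced" or "beginner"


def low_star_share_by_segment(reviews, threshold=2):
    """Return each segment's share of the reviews rated `threshold` stars or lower."""
    low = [r for r in reviews if r.stars <= threshold]
    if not low:
        return {}
    counts = Counter(r.segment for r in low)
    return {segment: count / len(low) for segment, count in counts.items()}


# Made-up sample data, purely for illustration.
reviews = [
    Review(1, "advanced"),
    Review(2, "advanced"),
    Review(1, "beginner"),
    Review(5, "beginner"),
]
shares = low_star_share_by_segment(reviews)
```

With tags like these, a question such as "do most low-star reviews come from advanced customers?" becomes a simple count rather than a gut feeling.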

Customer support tickets and incidents

Our product team also regularly goes through customer support tickets and incident reports to gain similar insights. These sometimes let us go a bit deeper into the mind of a customer and understand how they got phished or scammed, if that was the case.

We can often pick up on things such as which vector an attacker frequently uses, the messages they craft, and the emotions a customer is expressing at various stages.

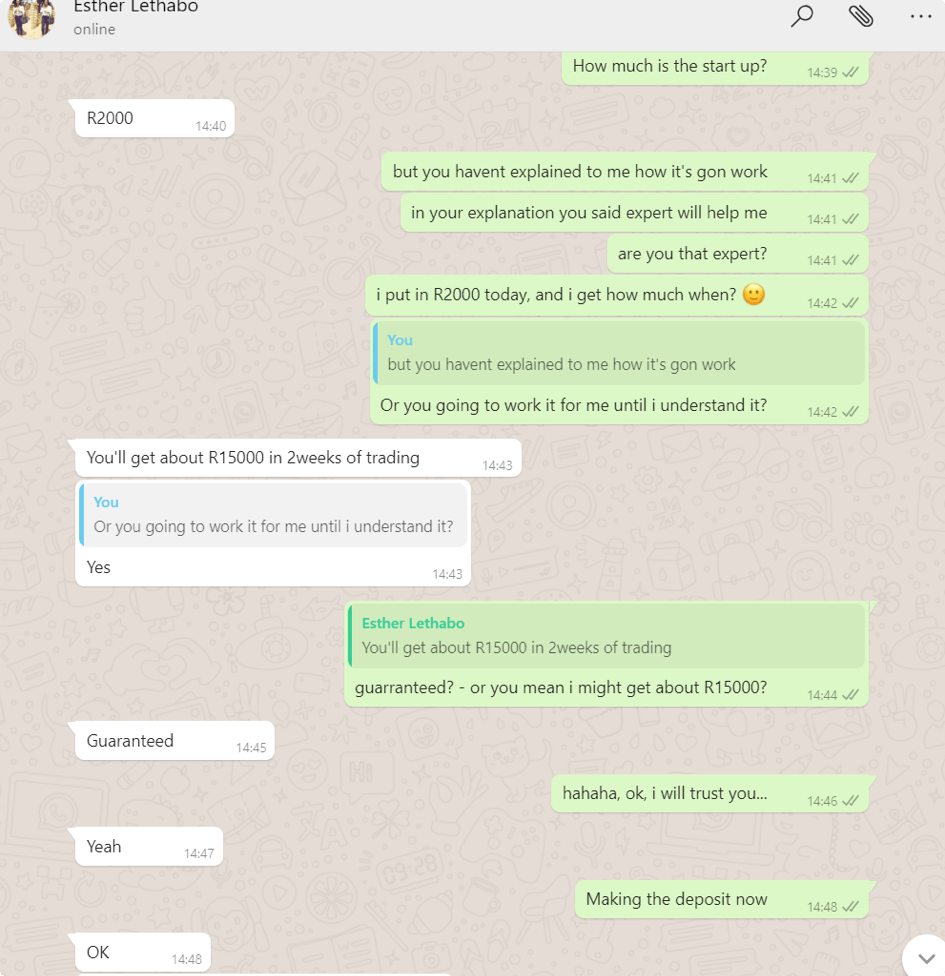

In this example, a customer explained his whole experience in full detail by sending us screenshots of a WhatsApp conversation he had with a scammer. These types of tickets enable us to learn a lot without having to write a script or get customers into the office.

Making learnings collective knowledge

Collective knowledge is powerful as it enables everyone to act as one team with one mission. Stories like Anna’s build shared empathy, allowing us to solve problems with the same context and background.

But how do you achieve this state of collective knowledge in an environment where everyone is super busy solving a variety of other problems that may not necessarily all be related?

We’ve found that frequently sharing tidbits of information with the team is quite useful.

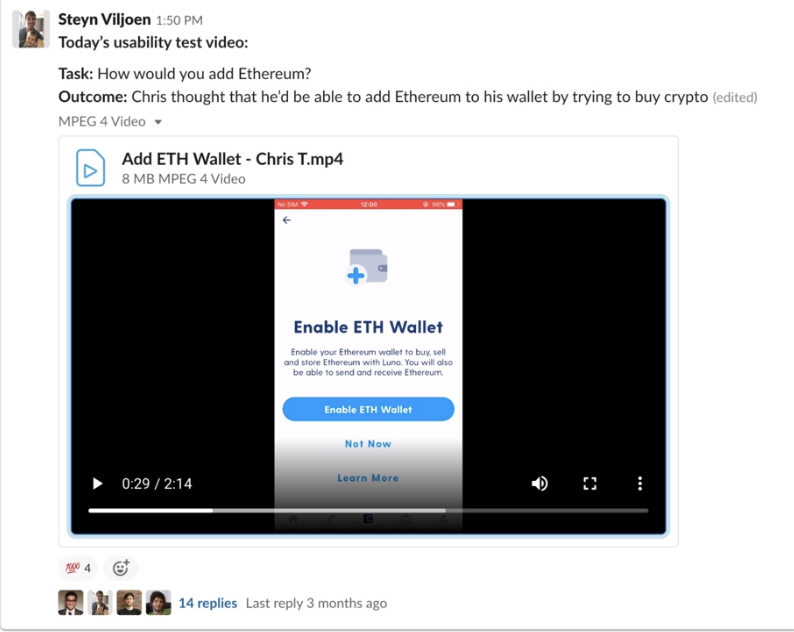

Previously, for example, we’ve broken down hours of customer research into a series of 1-3 minute snippets. We also regularly share and talk about learnings from store reviews, tickets, and things we pick up in the wild.

Slack works well for both of these examples as it turns individual learnings into collective knowledge, enabling us to build context, solve problems, and ultimately build a better product.

3. The magic of invisible interfaces

Over time, we’ve come to rely on two key learnings to create a more secure product:

- Telling someone not to click on a link is not enough

- Our customers’ primary goals are not to make their accounts more secure

Educating our customers is not enough

We work hard to remind our community never to share sensitive information or click on suspicious links, and always to check a website’s URL.

Even though we believe that informing our customers is critical, it’s not enough. One fine day, a customer might be in a great mood; their deception detection radar might be ‘down’ and biology might work against them.

Understanding our customers’ goals

We would love to think that the number one thing our customers want to do is to increase the security of their account, but sadly this is not a goal the majority of them have.

Therefore, understanding our customers’ goals is as important as knowing that merely educating them about good security behaviour isn’t enough. These two learnings are tightly coupled, and understanding them helps us design a more secure product without the customer having to make security their goal.

So how do we do this?

Examples of invisible UIs

Teams often try to build features that are reliant on user interfaces. But what if you don’t need a UI at all? Or less of it? What if the product can be so seamless that it becomes indistinguishable from magic? And what might that look like when the goal is to make people’s money more secure?

There are two specific ways in which we tackle these questions: smart defaults and smart features.

A “smart default” is, very simply, a feature whose default state is set smartly based on the knowledge we have of our customers.

Here’s an example: From our data, we know that most of our customers buy and hold their crypto. Based on this knowledge, by default, we disable the ability to send cryptocurrency out of your Luno account. This means that even if an attacker gets into a customer’s account, they’ll first have to authorise enabling the ability to send, thus keeping the customer’s money safer without them having to do anything at all.

Of course, this doesn’t mean people are allowed to be reckless, but it might help in cases where the deception detection radar fails to do the right job.
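
In code, the idea of a smart default might look something like the sketch below. This is a simplified illustration under assumptions, not how Luno actually implements it: the `Account` class, the `authorised` flag, and the error type are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


class SendDisabledError(Exception):
    """Raised when a send is attempted while sending is switched off."""


@dataclass
class Account:
    balance: float
    sending_enabled: bool = False  # the smart default: off until the customer opts in

    def enable_sending(self, authorised: bool) -> None:
        # `authorised` stands in for whatever extra confirmation step is required.
        if not authorised:
            raise PermissionError("enabling sends requires authorisation")
        self.sending_enabled = True

    def send(self, amount: float) -> None:
        # Refuse every send until the customer has explicitly enabled sending.
        if not self.sending_enabled:
            raise SendDisabledError("sending is disabled for this account")
        if amount <= 0 or amount > self.balance:
            raise ValueError("invalid amount")
        self.balance -= amount
```

The important design choice is that the safe state needs no action from the customer. An attacker who signs in still faces a second, separate authorisation step before any crypto can leave the account.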

A “smart feature” is one that doesn’t require a user interface, or is so woven into the customer’s regular journeys that they don’t have to go and look for it.

Here’s an example: From our data, we know that few people enable two-factor authentication (2FA) to increase the security of their accounts. So instead of expecting them to go and look for it in settings, we can make it part of their natural flow, asking them to increase their security right after their first buy.
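
As a rough sketch of that trigger, assuming a hypothetical `Customer` record with a buy counter and a 2FA flag (neither is taken from our real codebase), the logic could be as small as this:

```python
from dataclasses import dataclass


@dataclass
class Customer:
    completed_buys: int = 0
    two_factor_enabled: bool = False


def should_prompt_for_2fa(customer: Customer) -> bool:
    """Prompt right after the first buy, and only if 2FA is still off."""
    return customer.completed_buys == 1 and not customer.two_factor_enabled


def complete_buy(customer: Customer) -> bool:
    """Record a buy and report whether to show the 2FA prompt next."""
    customer.completed_buys += 1
    return should_prompt_for_2fa(customer)
```

The prompt appears once, at a moment when the customer has just put money into their account and has a reason to care about protecting it.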

For both of these features geared towards helping our customers, we need to take a step back and understand what their primary motivations and needs are.

4. The value of Dev/Design collaboration

Understanding our customers’ motivations and needs is one thing, but working together as a team to get things done correctly and into the hands of a customer is quite another.

We’ve seen this firsthand with our 2FA flow.

We know the friction around enabling 2FA is quite high and, for someone who “doesn’t have millions”, it’s simply not worth the effort. We wanted to validate whether reducing 2FA friction would increase adoption and reduce security incidents, so we started to investigate how we could optimise this flow.

We spent quite a bit of time understanding our current flow and mapping out the 2FA flows of other companies. We took those learnings and created a low fidelity prototype in one of the design tools we use, to test with customers. We soon realised that because setting up 2FA requires three different apps (app store, authentication app and Luno), we needed a higher fidelity prototype.

I chatted to the rest of the product team about the problem, and we agreed that building a fully functional prototype in code would allow us to test and validate our hypothesis in a more realistic environment, inviting better quality feedback.

Rapid prototyping

I started to work with one of our iOS engineers and got a fully functional prototype up and running within two to three days. Because setting up 2FA is such a universal process that applies to such a wide audience, finding people to test it with was super easy.

It was fun as well – I would run around the office asking team members at random this simple question: Can you show me how you would enable two-factor authentication for this app?

The very first test already showed us where and how we failed to communicate the different steps of the process. We immediately updated the copy or flow and created another build for more testing.

The results

Within a few days, we went through multiple iterations and a mixed bag of feedback. Some people who rarely use 2FA would breeze through the flow, while other, more advanced users would get lost entirely.

It was after seeing such a diverse range of feedback that I started to realise trying to optimise 2FA to increase adoption might be a textbook example of Tesler’s law. It states that for any system there’s a certain amount of complexity which cannot be reduced. Perhaps we were fighting against natural forces here.

After discussing with the team, we decided to stop spending more time trying to optimise a flow that might only have a marginal effect on 2FA adoption, and agreed instead to shift our attention to something that wouldn’t require 2FA at all to keep our customers’ money safer. I can’t say too much about it at the moment, but it combines our learnings from smart features and smart defaults. More on this coming soon!

If it weren’t for the close collaboration with our developers on this feature, we might never have been able to get such good quality feedback so quickly. Of course, not all testing requires this level of fidelity, but following our gut and being brave enough to try it saved us a lot of time and money. We could have spent a lot of time building this flow for all platforms and rolling it out to all our customers, only to learn that it had a marginal effect on adoption and security incidents.

Fortunately, we didn’t – and herein lies the value in testing.

Getting into the minds of customers

Creating products for a diverse set of people is hard. Doing this for a diverse set of people who experience a full array of emotions is even harder. But understanding how these emotions affect their daily interactions makes a world of difference.

There are many ways to help people increase the security of their money, but the one non-negotiable is getting into the minds of customers. This is what led us to closer cross-team collaboration at Luno, smarter features and happier customers.

If you’ve ever fallen victim to a phishing or account-related scam, we’d like to chat to you to gain more insight and better understand your experience.

You can opt in to our feedback programme here.

To the moon!
